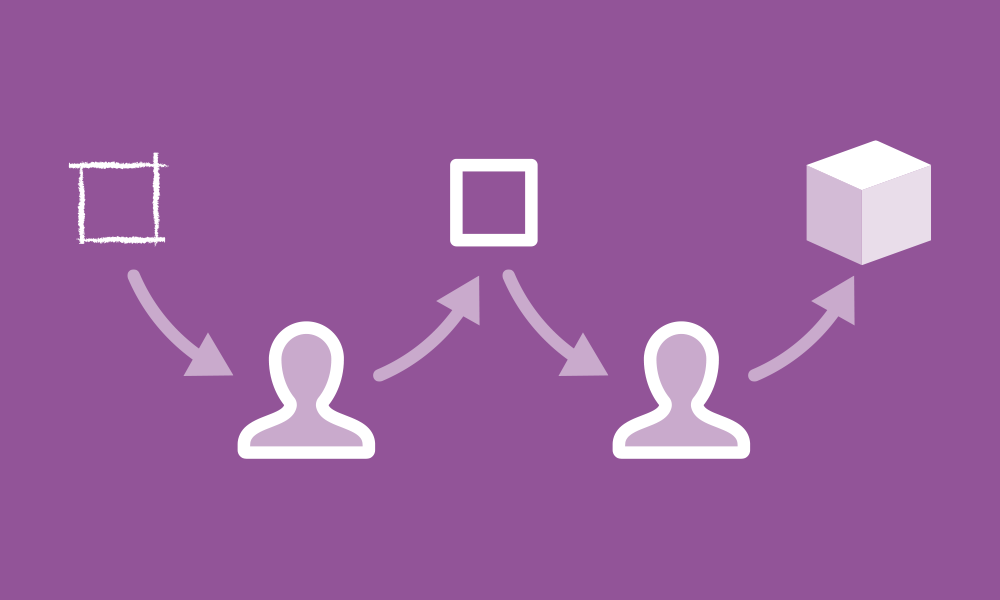

Most of the best-known usability practitioners encourage testing early and often, but it’s not always easy to know when, what and how to test. Just as Agile treats development as a series of short iterations, we recommend an iterative approach to testing.

When and what to test

Start early and test often. If a current product exists, test it. What is working and what needs to be improved? Test your sitemap and taxonomy. Do your users identify with the terms and phrases you’ve established? Test your wireframes and designs. Do the layout and organization of content work? Do users understand the interactions? Test working prototypes and the site as it’s being built. Does the entire product work together as a whole?

How to test

Before you conduct a usability test, you’ll need to do some up-front planning, which includes:

- Define your objective: At a high level, what are you trying to get out of this exercise?

- Identify what you need to measure: What specific areas do you need to measure to achieve your high-level objective?

- Create your tasks and/or questions: Which tasks or questions will help you measure those areas?

- Recruit testers: Who are the best people to test your product or platform?

Once you get through the planning steps, it’s time to conduct your iterative tests. To do this, follow these steps:

- Conduct a test

- Analyze your results

- Make updates to improve usability

- Repeat

1. Conduct a test

Select your first group of testers. There has been a lot of research on the ideal number of testers. Jakob Nielsen recommends 5, but we recommend starting with just 3 or 4. Because this is the first of many rounds you’ll be running, even a small group will quickly reveal areas that need improvement.
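Nielsen’s five-tester guideline rests on a simple model he published with Tom Landauer: if each tester independently runs into a given usability problem with probability L, then n testers are expected to uncover a share of 1 - (1 - L)^n of the problems. The Python sketch below assumes L = 0.31, the average Nielsen has reported across projects; the function name and default value are our own illustration, and the detection rate for your product may be quite different.

```python
def share_of_problems_found(n_testers: int, p_detect: float = 0.31) -> float:
    """Expected fraction of usability problems found by n_testers.

    Assumes each tester independently finds any given problem with
    probability p_detect. The 0.31 default is an assumed cross-project
    average, not a value measured on your product.
    """
    if n_testers < 0:
        raise ValueError("n_testers must be non-negative")
    if not 0.0 <= p_detect <= 1.0:
        raise ValueError("p_detect must be between 0 and 1")
    # Probability a problem is missed by every tester is (1 - p)^n.
    return 1.0 - (1.0 - p_detect) ** n_testers


if __name__ == "__main__":
    for n in (3, 4, 5, 10):
        print(f"{n} testers: {share_of_problems_found(n):.0%}")
```

Under that assumption, 3 testers find about two thirds of the problems and 5 find roughly 84%. The curve flattens quickly, which is one argument for running several small rounds rather than one large one.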

To avoid fatigue and keep participants engaged, keep each test as short as possible: 15-30 minutes works great! In our experience, this means 4-7 tasks per tester.

Let each tester work through the tasks at their own pace. Ask them to think aloud as they go through the steps. Record each session, capturing their screen, their face on camera and their voice on a microphone. There are many usability testing tools that will do this, but we’ve found Zoom, software intended for video conferencing, to be one of the best options because it works across many platforms. From a second account, you can watch testers as they work through each task and record the session for later analysis.

2. Analyze your results

If you watch the sessions live, you’ll spot areas that can be improved during the test itself. However, there’s still more to learn from the recordings. You’ll pick up on issues you didn’t notice during the test, and the testers’ facial expressions and tone of voice will come to life again.

Note anything the tester had trouble completing. Look for overlap between the tests. It can also help to have others on the team, especially those whose expertise differs from yours, attend the sessions or review the recordings. Their approach to reviewing the tasks is shaped by their area of focus, which allows them to suggest improvements from a different perspective.
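Looking for overlap is largely a matter of counting how many testers ran into each issue. Here is a minimal Python sketch, assuming you have already reduced each session’s notes to a set of short issue labels; the data shape, the labels and the `rank_issues` name are hypothetical.

```python
from collections import Counter


def rank_issues(sessions: dict[str, set[str]]) -> list[tuple[str, int]]:
    """Return (issue, number of testers who hit it), most widespread first.

    Each session is a set, so a tester counts at most once per issue.
    Ties are broken alphabetically to keep the order stable.
    """
    counts = Counter(issue for issues in sessions.values() for issue in issues)
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Hypothetical notes from three sessions; labels are illustrative only.
    notes = {
        "tester_1": {"missed search icon", "unclear 'Resources' label"},
        "tester_2": {"missed search icon", "checkout button below the fold"},
        "tester_3": {"missed search icon", "unclear 'Resources' label"},
    }
    for issue, n in rank_issues(notes):
        print(f"{n}/{len(notes)} testers: {issue}")
```

Counting each tester once per issue keeps one frustrated tester from inflating a single item. The ranking is a reasonable starting point for a backlog, though how severe each issue is still calls for the team’s judgment.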

3. Make updates to improve usability

After you have a complete list, review it with your team. What needs to happen for each item to result in a better user experience? Treat the list as a backlog of features and prioritize it. Sounds simple, right? But it’s not always as easy as it sounds. This may require you to throw away a lot of what you’ve already created and start from scratch, which can hurt the budget and be hard emotionally. All the more reason to start testing early. In the end, you’ll have a better, more usable platform and you’ll save time later in the project.

4. Repeat

Revisit the planning stage where you defined the objective and measurable components. Do your tasks still make sense? Are there any you need to refine? Any to add? Any to remove? It helps to keep some of the same tasks from round to round, because repeated tasks give you a measure of progress. Use your refined task list to repeat steps 1-3. What are the results now? Are the testers able to complete the tasks more easily? Can they complete them faster? What slows users down in this round?
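When you keep tasks across rounds, a per-task summary makes the comparison concrete. The Python sketch below assumes you log, for each attempt, the task name, whether the tester completed it and how long it took; the `Attempt` record, the helper names and the sample data are hypothetical, and timing only successful attempts is one choice among several.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Attempt:
    """One tester's attempt at one task (hypothetical record format)."""
    task: str
    completed: bool
    seconds: float


def summarize(attempts: list[Attempt]) -> dict[str, tuple[float, float]]:
    """Per task: (completion rate, median seconds over completed attempts).

    The median time is NaN when nobody completed the task.
    """
    by_task: dict[str, list[Attempt]] = {}
    for attempt in attempts:
        by_task.setdefault(attempt.task, []).append(attempt)

    summary: dict[str, tuple[float, float]] = {}
    for task, rows in by_task.items():
        times = [row.seconds for row in rows if row.completed]
        rate = len(times) / len(rows)
        summary[task] = (rate, median(times) if times else float("nan"))
    return summary


def compare_rounds(
    before: dict[str, tuple[float, float]],
    after: dict[str, tuple[float, float]],
) -> dict[str, tuple[float, float]]:
    """For tasks kept in both rounds: (change in completion rate,
    change in median seconds). A negative time change means faster."""
    return {
        task: (after[task][0] - before[task][0], after[task][1] - before[task][1])
        for task in before.keys() & after.keys()
    }


if __name__ == "__main__":
    # Illustrative data only.
    round_1 = summarize([
        Attempt("find pricing", True, 90),
        Attempt("find pricing", False, 180),
        Attempt("find pricing", True, 70),
    ])
    round_2 = summarize([
        Attempt("find pricing", True, 40),
        Attempt("find pricing", True, 50),
        Attempt("find pricing", True, 60),
    ])
    for task, (d_rate, d_time) in compare_rounds(round_1, round_2).items():
        print(f"{task}: completion {d_rate:+.0%}, median time {d_time:+.0f}s")
```

Only tasks present in both rounds are compared, which is why keeping some tasks unchanged matters. With 3 or 4 testers per round, treat these numbers as signals to investigate, not statistically reliable measurements.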

In Don't Make Me Think: A Common Sense Approach to Web Usability, Steve Krug writes: “If you want a great site, you’ve got to test. After you’ve worked on a site for even a few weeks, you can’t see it freshly anymore. You know too much. The only way to find out if it really works is to test it.” We’ve found that iterative user testing is the best way to build the most valuable product we can.